By Aswathy Jayachandran and Ramnik Kaur

Introduction

As part of our dedication to improving engineering best practices, LiveRamp has implemented automated continuous integration of LiveRamp services to enable developers to deploy high-quality code with high velocity. The focus is to qualify builds at each stage of automated testing, promote them to the next stage, and deploy only trusted containers to production, thus enforcing qualified build delivery and deploy-time security.

Challenges

Before we started the continuous integration with quality gates practice at LiveRamp, we had these problems:


- Both good and bad builds were deployed to production

- High customer impact due to deployments without proper testing

- Deployment and verification of deployed components were done manually, which led to human error

Goals

Our goal is to accelerate the deployment process without human intervention, such that failed tests or stages prevent a bad build from being deployed to production. Furthermore, our solution should support the following requirements:

- Release candidates should be finalized based on automated quality gates

- Only qualified release candidates can be deployed to production

- Qualified release candidates should be auto-deployed to production and should have required post-deployment monitoring

Solution: promotional model design

We have designed a promotional model using Jenkins through which qualified builds can be promoted to different deployment environments based on the defined quality gates. Also, failure of any stage in the promotional model will result in rolling back to the previous stable version of the service.

Promotional Model Workflow using Jenkins:

Promote as functionally ready and deploy to QA environment

- The Jenkins pipeline starts by generating the build and Kube manifest artifacts. It runs unit tests while building the service, SonarCloud runs static code analysis against the generated class binaries, and the pipeline then publishes docker images to Artifactory (tagged with build_timestamp or git commit hash).

- The pipeline then deploys the image to the dev cluster and runs functional tests for the service and cleans up the test data/Kube cluster. If all functional tests pass, promote to the next level of testing by tagging the image as functional. If the tests fail, the Jenkins pipeline should be configured to roll back to the previous version of the service in the dev cluster and fail the build.

- Automatic deployment to the QA environment: the QA environment should be configured to pull functional-tagged or dev-build-attestor-signed images of all the services by default.
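The dev-cluster gate described above (deploy, run functional tests, then either tag the image or roll back) can be sketched in Python. This is a minimal illustration under stated assumptions, not LiveRamp's pipeline code: the Cluster class, functional_gate, BuildFailed, the service name, and the commit-hash image tags are all hypothetical, and a real pipeline would act on the cluster and the registry instead of updating in-memory dictionaries.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Cluster:
    """Hypothetical in-memory stand-in for a Kubernetes cluster."""
    name: str
    running: Dict[str, str] = field(default_factory=dict)         # service -> image
    previous: Dict[str, List[str]] = field(default_factory=dict)  # service -> older images

    def deploy(self, service: str, image: str) -> None:
        # Remember what was running so that a failed gate can restore it.
        if service in self.running:
            self.previous.setdefault(service, []).append(self.running[service])
        self.running[service] = image

    def rollback(self, service: str) -> Optional[str]:
        # Restore the most recently replaced image (or remove the service if none).
        history = self.previous.get(service, [])
        if history:
            self.running[service] = history.pop()
        else:
            self.running.pop(service, None)
        return self.running.get(service)


class BuildFailed(Exception):
    """Raised to fail the (hypothetical) pipeline run."""


def functional_gate(dev: Cluster, stable_tags: Dict[str, str], service: str,
                    image: str, run_functional_tests: Callable[[str], bool]) -> None:
    """Deploy to dev, run functional tests, then tag as 'functional' or roll back."""
    dev.deploy(service, image)
    if not run_functional_tests(image):
        dev.rollback(service)
        raise BuildFailed(f"functional tests failed for {image}")
    # Promotion: point the stable 'functional' tag at this commit-tagged image.
    stable_tags[f"{service}:functional"] = image


# Usage with a hypothetical service and commit-hash tags.
dev = Cluster("dev")
stable_tags: Dict[str, str] = {}
functional_gate(dev, stable_tags, "example-svc", "example-svc:3f2a9c1", lambda img: True)
try:
    functional_gate(dev, stable_tags, "example-svc", "example-svc:b71e04d", lambda img: False)
except BuildFailed:
    pass  # dev is back on example-svc:3f2a9c1 and the 'functional' tag did not move
```

Updating the stable tag only after the test check means a failing build never moves the functional tag, so the QA environment keeps pulling the last qualified image.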

Promote as integration-ready and deploy to staging environment

- As mentioned in the previous stage, the QA environment gets the new build automatically once the image is tagged functional or signed by dev-build-attestor. After verifying that the service-under-test deployed successfully, the Jenkins pipeline runs integration tests in the QA environment.

- If all the tests pass, the build is promoted to the next level of testing by tagging the image as integration. If the tests fail, the Jenkins pipeline should be configured to roll back to the previous version of the service in the QA environment and fail the build.

- Finally, the integration-tagged or qa-build-attestor-signed image of the service-under-test is deployed to staging.
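In staging, only the service-under-test runs its new integration build, while the other services keep running their production versions. A small Python sketch of how a pipeline might compute that image set is shown here; the registry host, the service names, and the assumption that a service's production version is its prod_ready tag are illustrative, not taken from LiveRamp's setup.

```python
from typing import Dict, List


def staging_images(service_under_test: str, services: List[str],
                   registry: str = "registry.example.com") -> Dict[str, str]:
    """Pick the image reference each service should run in staging.

    The service-under-test runs its 'integration' build; every other service
    runs its production build, assumed here to be its 'prod_ready' tag.
    """
    if service_under_test not in services:
        raise ValueError(f"{service_under_test} is not part of the staging environment")
    images = {}
    for service in services:
        tag = "integration" if service == service_under_test else "prod_ready"
        images[service] = f"{registry}/{service}:{tag}"
    return images


# Hypothetical three-service environment where svc-a is under test.
plan = staging_images("svc-a", ["svc-a", "svc-b", "svc-c"])
for service, image in sorted(plan.items()):
    print(service, image)
```

Testing the new build against the production versions of its neighbors is what gives the version-compatibility check before production deployment.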

Promote as prod-ready and deploy to production

- As the next step, the staging environment gets the new build automatically once the image is tagged integration or signed by qa-build-attestor. Automated regression tests are executed in staging. If all the tests pass, the image is promoted to production by tagging it as prod_ready or signing it with prod-build-attestor.

- Production clusters are configured to pull prod_ready images or to accept images signed by prod-build-attestor, so new builds are either pulled automatically or deployed by the Jenkins pipeline.
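Taken together, the three stages form an ordered chain in which each tag is earned only after the previous gate has passed. The Python sketch here models that ordering under simplifying assumptions: gate results are supplied as booleans, the rollback is reported rather than performed, and PROMOTION_STAGES and run_promotion are hypothetical names.

```python
from typing import Dict, List, Tuple

# (environment, quality gate, tag earned when the gate passes)
PROMOTION_STAGES: List[Tuple[str, str, str]] = [
    ("dev", "functional tests", "functional"),
    ("qa", "integration tests", "integration"),
    ("staging", "regression tests", "prod_ready"),
]


def run_promotion(gate_results: Dict[str, bool]) -> Tuple[List[str], str]:
    """Walk the gates in order and stop at the first failure.

    gate_results maps a gate name to whether it passed; a missing gate counts
    as a failure, so a build can never skip ahead by accident.
    """
    earned: List[str] = []
    for env, gate, tag in PROMOTION_STAGES:
        if not gate_results.get(gate, False):
            return earned, f"{gate} failed: roll back {env} and fail the build"
        earned.append(tag)
    return earned, "prod_ready: eligible for production deployment"


print(run_promotion({"functional tests": True, "integration tests": False}))
```

Treating a missing result as a failure is a deliberately conservative choice for this sketch; the hotfix path discussed next is where stages are skipped on purpose.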

Promotional model workflow for hotfixes

When there are hotfixes that require immediate deployment, we have the option to skip some of the stages and promote the image to production. This is achieved by the Jenkins pipeline’s conditional stages.
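Whatever the pipeline syntax, the hotfix decision comes down to computing which stages run. The Python sketch here is a hypothetical model of that decision, not the JenkinsFile itself: the stage names, the SKIPPABLE_FOR_HOTFIX policy, and stages_to_run are assumptions. In a declarative JenkinsFile, the equivalent condition would typically sit in a when directive on each skippable stage.

```python
from typing import Iterable, List

PIPELINE_STAGES = [
    "build and unit tests",
    "static code analysis",
    "functional tests",
    "integration tests",
    "regression tests",
    "promote to production",
]
# Hypothetical policy: only these stages may be skipped, and only for hotfixes.
SKIPPABLE_FOR_HOTFIX = {"integration tests", "regression tests"}


def stages_to_run(is_hotfix: bool, skip: Iterable[str] = ()) -> List[str]:
    """Return the stages to execute, honoring skip requests only for hotfixes."""
    requested = set(skip)
    not_allowed = requested - SKIPPABLE_FOR_HOTFIX
    if not_allowed:
        raise ValueError(f"stages cannot be skipped: {sorted(not_allowed)}")
    if not is_hotfix:
        return list(PIPELINE_STAGES)  # regular builds run every gate
    return [stage for stage in PIPELINE_STAGES if stage not in requested]


print(stages_to_run(is_hotfix=True, skip=["regression tests"]))
```

Rejecting skip requests outside an allowed set keeps a hotfix from bypassing every gate by mistake; which stages belong in that set is a policy decision.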

Below is a JenkinsFile snippet that shows how a stage can be skipped based on user input:

Implementation: promotional model

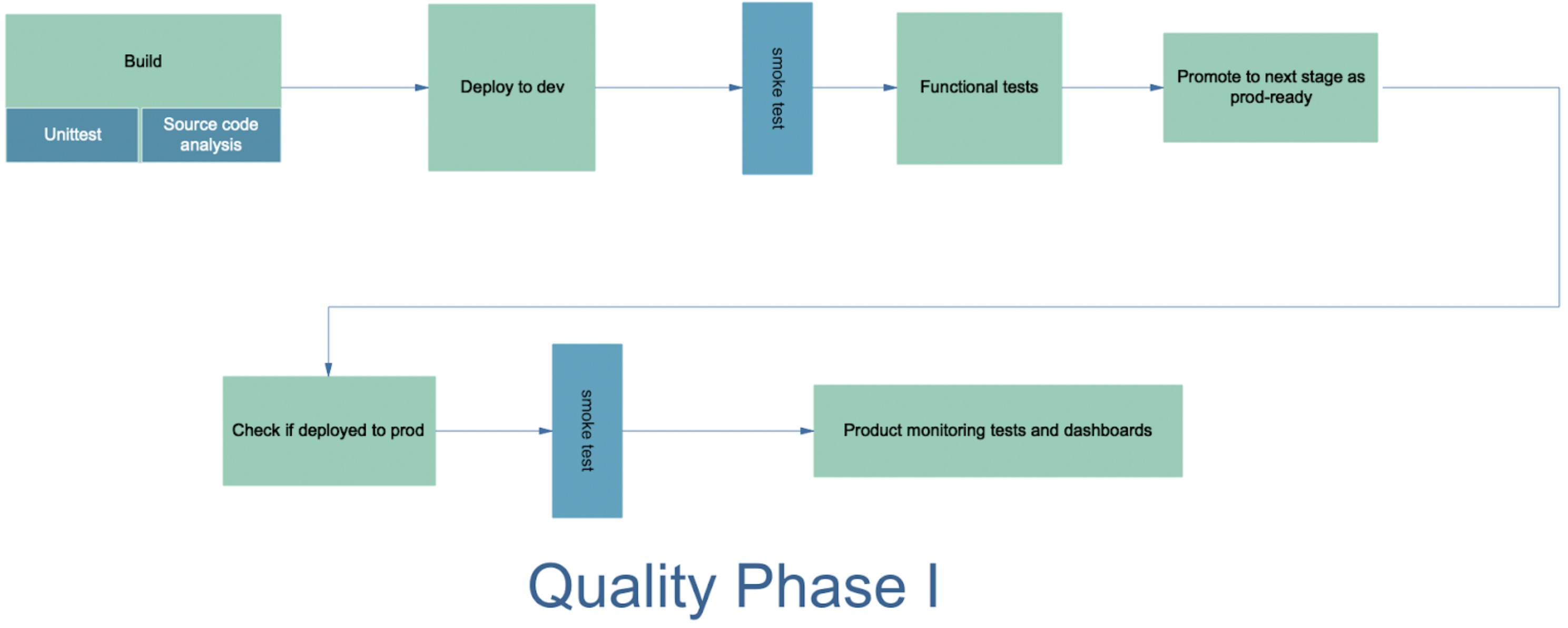

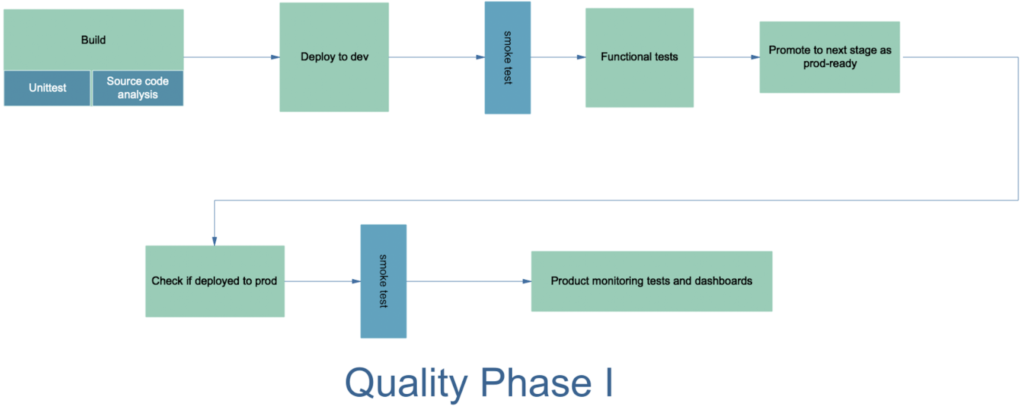

We have currently implemented the testing and promotional stages for unit tests, static code analysis, functional tests, and promotion to prod. This phase is known as Quality Phase 1 at LiveRamp.

The current implementation uses stable docker tags after each quality gate to promote a docker image to the next stage. Tagging docker images after each quality gate shows which stage of testing an image has reached. Deployment environments are configured to pull the latest docker image for their respective tags, which enables continuous delivery of qualified builds to the destination environments. These are the docker tags currently used at LiveRamp:

- functional:
  - Docker image of the service is tagged functional after successful completion of functional tests, indicating that it is ready to be promoted to the next stage, e.g., integration tests
  - QA clusters should be configured to pull “functional”-tagged docker images of all the services to run integration tests
- integration:
  - Docker image of the service is tagged integration after successful completion of integration tests, indicating that it has passed all the integration requirements and is ready to be promoted to the next stage of testing, e.g., regression or end-to-end tests
  - Staging clusters should be configured to pull the integration-tagged docker image of the service-under-test to run regression or end-to-end tests, while other services run their production version, thus ensuring version compatibility before production deployment
- prod_ready:
  - Docker image of the service is tagged prod_ready after successful completion of regression or end-to-end tests, indicating that it's ready to be deployed to production
  - Production clusters should be configured to pull the prod_ready-tagged docker image of all the services
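Because each environment pulls whatever image its stable tag currently points to, promotion amounts to moving a tag. The Python sketch here models a registry as an in-memory map; TagRegistry, image_for, the commit-hash build tags, and the environment-to-tag table are hypothetical. It also illustrates the traceability issue raised in the next paragraph: after the functional tag moves, the registry no longer records which build it pointed to before.

```python
from typing import Dict

# Stable tag each environment pulls (staging applies it to the service-under-test only).
ENV_TAGS = {"qa": "functional", "staging": "integration", "production": "prod_ready"}


class TagRegistry:
    """Hypothetical in-memory registry: stable tags are movable pointers to build tags."""

    def __init__(self) -> None:
        self._tags: Dict[str, Dict[str, str]] = {}  # service -> {tag -> build tag}

    def push(self, service: str, build_tag: str) -> None:
        # Each build is pushed once under an immutable tag such as a commit hash.
        self._tags.setdefault(service, {})[build_tag] = build_tag

    def promote(self, service: str, build_tag: str, stable_tag: str) -> None:
        # Promotion only moves the stable tag; its previous target is not remembered.
        if build_tag not in self._tags.get(service, {}):
            raise KeyError(f"{service}:{build_tag} was never pushed")
        self._tags[service][stable_tag] = build_tag

    def resolve(self, service: str, tag: str) -> str:
        return self._tags[service][tag]


def image_for(registry: TagRegistry, env: str, service: str) -> str:
    """Image an environment would pull right now for a service."""
    return f"{service}:{registry.resolve(service, ENV_TAGS[env])}"


registry = TagRegistry()
registry.push("svc-a", "3f2a9c1")
registry.promote("svc-a", "3f2a9c1", "functional")
registry.push("svc-a", "b71e04d")
registry.promote("svc-a", "b71e04d", "functional")
print(image_for(registry, "qa", "svc-a"))  # prints svc-a:b71e04d
```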

Overall, docker tagging works well for fast deployments to development and to environments where changes are pushed constantly, but it has limitations: any privileged user could still bypass the process and deploy to production. Also, custom stable tags do not map to a specific git commit or build number, which makes revoking a specific commit harder.

Next steps

As the next step, we plan to implement Google Binary Authorization as part of our strategy for promoting builds to production. By enabling Binary Authorization for each deployment environment, we can ensure that only qualified, trusted images are deployed.

Proposed Google Binary Authorization flow:


- QA clusters should be configured to accept only the images that are signed by dev-build-attestor after successful completion of functional tests

- Staging clusters should be configured to accept the new image of the service-under-test signed by qa-build-attestor after successful completion of integration tests, along with the production versions of the other services signed by prod-build-attestor

- Production clusters should be configured to accept only the images that are signed by the prod-build-attestor after successful completion of all the testing stages.

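The proposed acceptance rules amount to a lookup from the environment (and, for staging, from whether the image belongs to the service-under-test) to the attestor whose signature is required. The Python sketch here models only that decision; in the proposal the check would be enforced by Binary Authorization at deploy time rather than by pipeline code, and required_attestor and is_admitted are hypothetical names.

```python
from typing import Optional, Set

# Attestor each environment requires in the proposed flow (staging is handled separately).
REQUIRED_ATTESTOR = {
    "qa": "dev-build-attestor",
    "production": "prod-build-attestor",
}


def required_attestor(env: str, service: str, service_under_test: Optional[str] = None) -> str:
    if env == "staging":
        # Staging: new build of the service-under-test, production builds of everything else.
        return "qa-build-attestor" if service == service_under_test else "prod-build-attestor"
    return REQUIRED_ATTESTOR[env]


def is_admitted(env: str, service: str, attestations: Set[str],
                service_under_test: Optional[str] = None) -> bool:
    """True if the image carries the attestation this environment expects."""
    return required_attestor(env, service, service_under_test) in attestations
```

For example, an image signed only by dev-build-attestor and qa-build-attestor is admitted to staging as the service-under-test but rejected by production.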

Rollback in promotional model

Another improvement we are looking into is adding a rollback stage for failed deployments. This will add the following stages to the promotional model:

- Roll back to the previous stable state of the service-under-test when a quality gate fails

- Rollback testing to make sure the service can roll back to its previous version and is backward compatible; this would be an additional stage before the build is promoted as prod-ready
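A minimal Python sketch of how the two proposed rollback stages could fit together: a failed quality gate returns the service to its last stable version, and a passing build must also pass a rollback test before it becomes the new stable version. ServiceDeployment, deploy_with_rollback, and the gate callbacks are hypothetical; what the rollback test actually checks (for example, the backward-compatibility checks mentioned above) is left to the caller.

```python
from typing import Callable, List


class ServiceDeployment:
    """Hypothetical record of one service in one environment."""

    def __init__(self, stable_image: str) -> None:
        self.stable: List[str] = [stable_image]  # last entry = current stable version
        self.current = stable_image

    def deploy(self, image: str) -> None:
        self.current = image

    def rollback(self) -> str:
        # Return to the most recent stable version.
        self.current = self.stable[-1]
        return self.current

    def mark_stable(self) -> None:
        if self.current != self.stable[-1]:
            self.stable.append(self.current)


def deploy_with_rollback(dep: ServiceDeployment, image: str,
                         gate_passes: Callable[[str], bool],
                         rollback_test_passes: Callable[[str, str], bool]) -> bool:
    """Deploy, run the quality gate and a rollback test; roll back on any failure."""
    dep.deploy(image)
    if not gate_passes(image):
        dep.rollback()
        return False
    # Rollback test: can the service return from the new version to the last stable one?
    if not rollback_test_passes(image, dep.stable[-1]):
        dep.rollback()
        return False
    dep.mark_stable()
    return True


dep = ServiceDeployment("svc-a:v1")
ok_v2 = deploy_with_rollback(dep, "svc-a:v2", lambda img: True, lambda new, old: True)
ok_v3 = deploy_with_rollback(dep, "svc-a:v3", lambda img: False, lambda new, old: True)
```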

Appendix

Technical background

JenkinsFile

- Jenkins pipelines can be defined with either declarative or scripted pipeline syntax, which describes the steps involved in the pipeline

- A JenkinsFile defines the Jenkins pipeline stages and can be checked into a source control repository

- For more details: https://www.jenkins.io/doc/book/pipeline/jenkinsfile/

Jenkins promote plugin

- Jenkins provides a promote/abort action for manual promotion. When not all the stages are implemented yet, we may have to promote manually to proceed with deployment to the next environment

- Only permitted users can promote to the next stage manually

- The below diagram shows the Jenkins promote plugin pop-up window to proceed or abort the pipeline when there is a missing stage

- In the below diagram, build #1 shows that the user decided to proceed with the pipeline and the build got promoted to prod; in build #2, the user aborted prod promotion.
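The manual promotion step can be modeled as a permission check followed by a proceed/abort decision. This Python sketch is hypothetical (PERMITTED_PROMOTERS and manual_promotion are illustrative names, not part of any Jenkins plugin) and mirrors builds #1 and #2 from the description above.

```python
from typing import Set

# Hypothetical set of users allowed to approve a manual promotion.
PERMITTED_PROMOTERS: Set[str] = {"release-manager"}


def manual_promotion(build_number: int, user: str, decision: str,
                     permitted: Set[str] = PERMITTED_PROMOTERS) -> str:
    """Model the proceed/abort choice shown when a stage is not automated yet."""
    if user not in permitted:
        raise PermissionError(f"{user} may not promote build #{build_number}")
    if decision == "proceed":
        return f"build #{build_number} promoted to prod"
    if decision == "abort":
        return f"build #{build_number} prod promotion aborted"
    raise ValueError(f"unknown decision: {decision!r}")


print(manual_promotion(1, "release-manager", "proceed"))
print(manual_promotion(2, "release-manager", "abort"))
```

For comparison, Jenkins Pipeline's built-in input step presents a similar proceed/abort prompt, and its submitter parameter restricts who may respond.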

Docker tags

Docker tags are a way to refer to an image and provide useful information about it.

Whenever an image does not have an explicit tag, it is given the “latest” tag by default, but this tag makes it hard to tell which image is actually being deployed. Hence, semantic versioning or stable tags help define the state of a docker image.

A stable tag means a developer or build system can keep pulling a specific tag that continues to get updates. Stable doesn't mean the contents are frozen; rather, it implies the image should be stable for the intent of that version.

In a CI/CD pipeline, we can define custom stable tags after each quality gate so the build system can promote the image to the next stage of testing. The Implementation section describes how we use docker tags in the promotional model.
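To make the default-tag rule concrete, this Python sketch splits an image reference into name and tag and falls back to “latest” when no tag is given. It is a simplified, hypothetical helper (split_image_ref is not a Docker API): it looks for a tag only in the last path component so that a registry port such as localhost:5000 is not mistaken for a tag, and it rejects digest references rather than parsing them.

```python
from typing import Tuple


def split_image_ref(ref: str) -> Tuple[str, str]:
    """Split an image reference into (name, tag), defaulting the tag to 'latest'.

    Only the last path component can carry a tag, so a registry port such as
    'localhost:5000' is not mistaken for a tag. Digest references are rejected.
    """
    if "@" in ref:
        raise ValueError("digest references (name@sha256:...) are not handled here")
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        return ref[:last_colon], ref[last_colon + 1:]
    return ref, "latest"


print(split_image_ref("localhost:5000/svc-a"))
print(split_image_ref("localhost:5000/svc-a:functional"))
```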

Google’s Binary Authorization


- Google’s Binary Authorization secures the build-deployment process with tighter controls by enforcing signature validation during deployment

- Because enforcement happens in Google Kubernetes Engine, the Kube cluster accepts only images signed by trusted authorities, which prevents accidental deployments

- Binary Authorization supports whitelisting images that do not require attestation, like third-party images

- Binary Authorization integrates with Cloud Audit Logging to record failed pod creation attempts for later review

- The breakglass annotation allows pod creation even if images violate the policy, and the audit logs can be monitored to review these deployments
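The interplay of exempt images, attestation checks, and breakglass can be sketched as a single admission decision. This Python function is a simplified model, not Binary Authorization's API or exact semantics: admit and its parameters are hypothetical, exempt patterns are matched with Python's fnmatch rather than Binary Authorization's own pattern rules, and the audit log is a plain list standing in for Cloud Audit Logging.

```python
import fnmatch
from typing import List, Set


def admit(image: str, attestations: Set[str], required_attestor: str,
          exempt_patterns: List[str], breakglass: bool, audit_log: List[str]) -> bool:
    """Simplified admission decision in the spirit of Binary Authorization."""
    # 1. Exempt (whitelisted) images, such as third-party images, need no attestation.
    if any(fnmatch.fnmatch(image, pattern) for pattern in exempt_patterns):
        return True
    # 2. Otherwise the image must carry the required attestation.
    if required_attestor in attestations:
        return True
    # 3. Breakglass admits the image anyway but leaves a record for review.
    if breakglass:
        audit_log.append(f"breakglass: {image} admitted without {required_attestor}")
        return True
    # 4. Denied attempts are also recorded for later review.
    audit_log.append(f"denied: {image} lacks {required_attestor}")
    return False


audit_log: List[str] = []
third_party_ok = admit("docker.io/library/redis:7", set(), "prod-build-attestor",
                       ["docker.io/library/*"], False, audit_log)
unsigned_ok = admit("registry.example.com/svc-a:prod_ready", {"qa-build-attestor"},
                    "prod-build-attestor", [], False, audit_log)
```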

XXX-build-attestor

As described in the Google Binary Authorization section, an attestor is used to verify the attestation. For our internal build attestation, we name attestors “<env>-build-attestor” after the environment the build is deployed to, e.g., dev-build-attestor for the development environment, qa-build-attestor for the QA environment, and prod-build-attestor for the prod environment, so that different attestation policies can be applied to each environment.